Automating Claims and Payment Status Inquiries in Healthcare

Business Problem

Business Solution

Technical Solution

Technologies

In this case study, a variety of technologies were used to implement a big data solution. Hadoop and Spark provided distributed storage and processing for large volumes of data, and Scala was the programming language used to write the application code. Cloudera was the Hadoop distribution, providing a complete big data platform. Informatica handled data integration, and the application was built on a microservices architecture. Python was used for data processing and TensorFlow for machine learning tasks. erwin Data Modeler was used for data modeling, and the erwin Web Portal was used to access the data model. Atlassian FishEye and Crucible supported code review and collaboration.

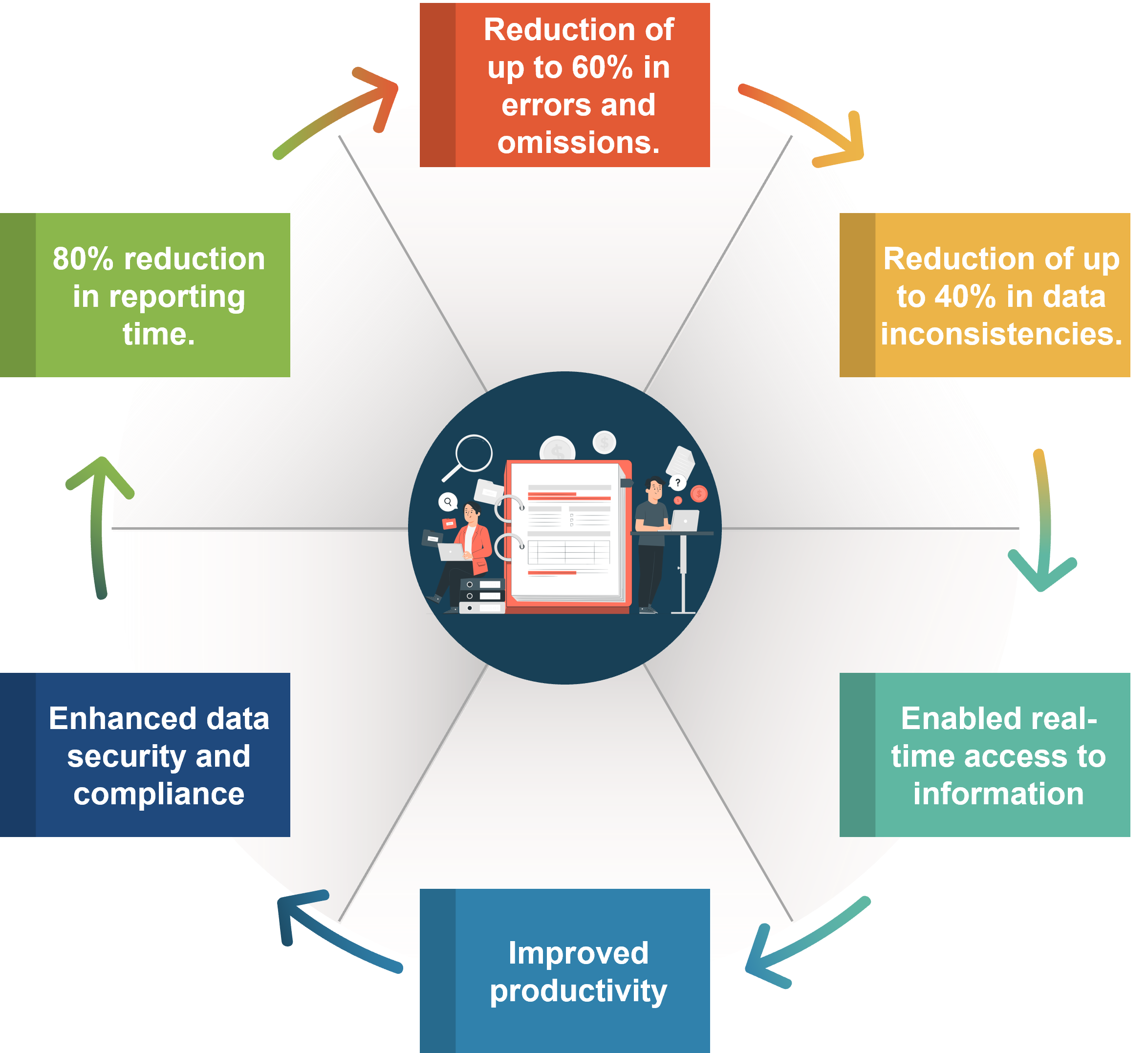

Customer Success Outcomes

The following are the quantified outcomes of automating claims and payment status inquiries in this case study:
